From my experience as a Master’s student in med tech, it can be difficult to understand the wider impact of your research and its potential for being translated into a usable tool for clinicians. This can be frustrating, particularly if you, like me, are more drawn to the potential applications of your work than to its scientific novelty alone.

With this in mind, I’ve decided to have a go at creating a ‘Translational Research’ (TR) Index. The idea is very simple: score a potential research project against a handful of key criteria which serve as indicators of translational potential. In doing so, we could:

- Understand the kind of research project being proposed

- Direct funding to appropriate places

- Direct resources (i.e. staff, equipment, etc.) to appropriate places

- Establish incentives early on and influence outcomes

- Influence recruitment

- Establish appropriate relationships, joint ventures, partnerships etc. based on incentives and outcomes

Much like an investor screening through stocks which meet a pre-defined set of variables, we could theoretically score projects using the TR index and then screen through projects which satisfy the specific translational criteria we are hoping to meet. This not only gives us an idea of how high the translational potential of a project might be but also allows us to possibly screen various projects based on specific goals (i.e. knowledge expansion, commercialisation, IP generation, etc.).
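To make the screening idea a little more concrete, here is a minimal Python sketch. It assumes each project has already been given points per criterion under the scoring system defined later in this post. The `ScoredProject` class, the `screen` function, the criterion keys and the two example projects are all hypothetical, made up purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScoredProject:
    """A project plus the points it earned on each criterion (hypothetical structure)."""
    name: str
    scores: dict  # criterion name -> points awarded


def screen(projects, minimums):
    """Keep only projects that meet every minimum score in `minimums`."""
    return [
        p for p in projects
        if all(p.scores.get(criterion, 0) >= floor
               for criterion, floor in minimums.items())
    ]


# Made-up projects, for illustration only.
projects = [
    ScoredProject("Project A", {"end_user": 25, "scalability": 10}),
    ScoredProject("Project B", {"end_user": 0, "scalability": 20}),
]

# Screen for projects with at least indirect end-user involvement (12 points).
shortlist = screen(projects, {"end_user": 12})
print([p.name for p in shortlist])
```

Changing the `minimums` dictionary is how different goals (commercialisation, IP generation and so on) would translate into different screens.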

The TR Index could be defined by the following scoring system, which produces a ‘Translational Value Potential’ (TVP):

- Hypothesis (45 points)

  - Hypothesis tested by fast, frugal experimentation based on simplicity, feasibility and clinic/market relevancy (45)

  - Hypothesis tested by experimentation based on clinic/market relevancy (23)

  - Hypothesis tested by any experimental parameters necessary to further knowledge, without regard to clinic/market application (0)

- Involvement of end-user (25 points)

  - End-user directly involved in project design and testing (25)

  - End-user indirectly involved via a partnership, joint venture, etc. (12)

  - End-user has no involvement in the project design (0)

- Timeframe (5 points)

  - Measurable timeframe to achieve specific, ‘usable’ deliverable (5)

  - No timeframe to achieve specific, ‘usable’ deliverable (0)

- Scalability (20 points)

  - Synthesis process can currently be implemented at scale (20)

  - With reasonable development, synthesis process can eventually be implemented at scale (10)

  - By definition, synthesis process unable to be scaled (0)

- Intellectual Property (5 points)

  - Project generates entirely new IP (5)

  - Project builds on prior IP (0)

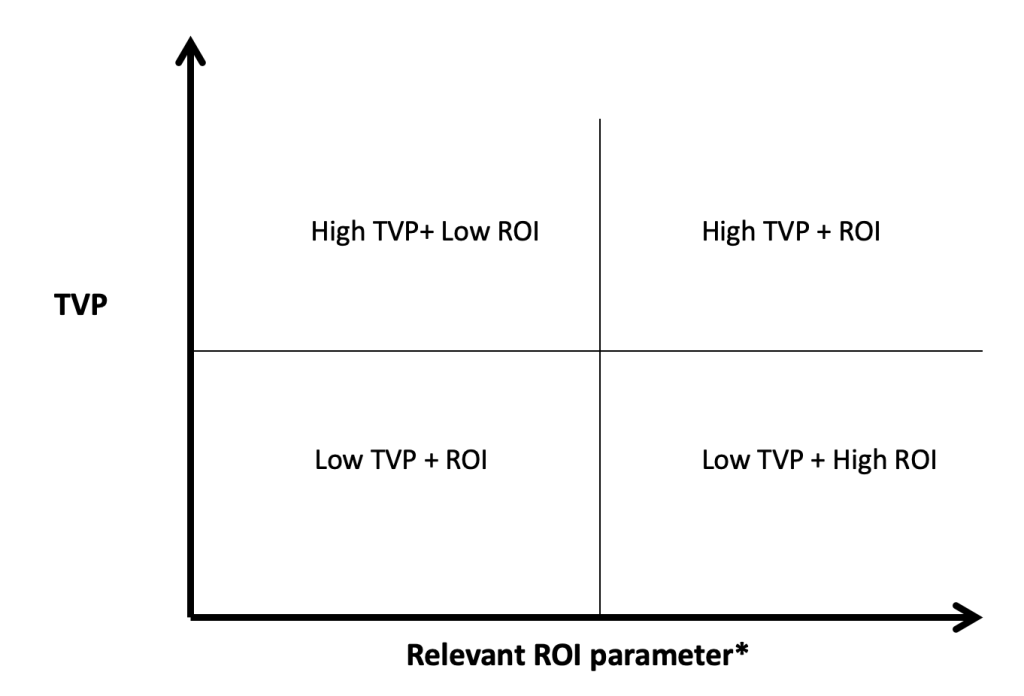

The TVP would then be summed out of 100 and reported as a percentage. We could then pair the TVP with a relevant ROI parameter to give further insight into the actual economic (or even social) returns on the project.
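As a rough illustration of the arithmetic, the scoring system above can be written as a small lookup table in Python. The point values are the ones listed in this post; the short level keys (`fast_frugal`, `direct` and so on), the `tvp` function name and the example project are my own hypothetical labels, not part of any established tool.

```python
# Points for each level of each criterion, taken from the scoring list above.
# The short keys are hypothetical labels for the levels.
TR_INDEX = {
    "hypothesis": {"fast_frugal": 45, "relevant": 23, "knowledge_only": 0},
    "end_user": {"direct": 25, "indirect": 12, "none": 0},
    "timeframe": {"measurable": 5, "none": 0},
    "scalability": {"now": 20, "with_development": 10, "not_scalable": 0},
    "ip": {"new": 5, "prior": 0},
}


def tvp(answers):
    """Return the Translational Value Potential as a percentage (0-100)."""
    missing = set(TR_INDEX) - set(answers)
    if missing:
        raise ValueError(f"unscored criteria: {sorted(missing)}")
    # Sum the points for the chosen level of every criterion.
    total = sum(TR_INDEX[c][answers[c]] for c in TR_INDEX)
    # The maximum is 45 + 25 + 5 + 20 + 5 = 100.
    max_total = sum(max(levels.values()) for levels in TR_INDEX.values())
    return 100 * total / max_total


# A made-up project, for illustration only.
example = {
    "hypothesis": "relevant",           # 23
    "end_user": "direct",               # 25
    "timeframe": "measurable",          # 5
    "scalability": "with_development",  # 10
    "ip": "prior",                      # 0
}
print(tvp(example))  # 63.0
```

Keeping the weights in one table should make it easy to swap in a field-specific version of the index later.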

Some possible ROI parameters might include:

- Estimated economic ROI

- Estimated external investment into the project as % of project costs

- Estimated income from/value of consultancy contracts as a % of project costs

- Estimated income from/value of licenses generated as a % of project costs

- Estimated revenue/market value of active spin-outs or start-ups created as a % of project costs

The ROI parameter might change with the goals of the project or the field it’s in. For example, a project focused on delivering value to patients might focus on objective evidence of health improvements in outputs such as reduced deaths, hospital admissions or attendances at health clinics.
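Several of the ROI parameters listed above are expressed as a percentage of project costs. One way to read the idea is to report such a parameter side by side with the TVP, as in the sketch below. The function name, the report layout and all of the figures are hypothetical and chosen only to show the calculation.

```python
def pct_of_project_costs(value, project_costs):
    """Express a monetary value as a percentage of total project costs."""
    if project_costs <= 0:
        raise ValueError("project_costs must be positive")
    return 100 * value / project_costs


# Made-up figures: 50,000 of external investment against 200,000 of project costs.
report = {
    "tvp_pct": 63.0,  # assumed to have been scored separately
    "external_investment_pct": pct_of_project_costs(50_000, 200_000),
}
print(report)
```

A patient-focused project would replace the financial parameter with a health outcome measure, which would need its own definition.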

I will use another post to demonstrate the application of the TR index to an existing research project, but for now, I thought it might be useful to list the (many) improvements needed to make this index into a practical tool, as it is still a work in progress after all!

- Clearly, the index will be far more useful if it is modified to apply to different fields: translational potential in the semiconductor industry is defined very differently from how it might be in med tech, for example. I will look at making indexes specific to different fields if there is demand for something like this.

- It can be challenging to bridge the gap between novelty for the sake of furthering knowledge and creating something ‘useful’ that can be applied; both are important. We need to ensure we aren’t discouraging the development of complex, novel innovations which might take longer to produce but might still provide us with great value (whether academic or translational). As such, the index should merely serve as an indicator of early project incentives and potential outcomes, as opposed to a tool for ‘ruling out’ any projects with too long a timeframe or too complicated an agent. How can the index be improved to ensure we don’t discourage the more complex scientific innovations?

- I have included involvement by the end-user as part of the criteria. To me, it is critical that the end-user play at least some role in the development of a project which is meant to deliver an innovation to them. Like a start-up founder thoroughly understanding market trends before pursuing a business idea, I believe a research team focused on creating a usable innovation should think very carefully about who the ‘consumer’ of that innovation will be. At the same time, I understand that these projects can be inherently complex and perhaps at a stage where it might be difficult or next to impossible to even consider the end-user. Perhaps it would be worth thinking about how we might modify the index for these kinds of projects.

- The words ‘frugal’, ‘fast’, ‘feasible’ and ‘simple’ need to be further defined and perhaps even weighted to introduce finer-grained scoring into the Hypothesis criterion. Again, the way these terms are defined is very likely to change with the field we are considering.

Next steps for the index will include plenty more research into how R&D projects are currently planned and evaluated within academia and industry. Some useful resources I’ve found include:

- Rates of Return to Investment in Science and Innovation (2014), by Frontier Economics

- Metrics for the Evaluation of Knowledge Transfer Activities at Universities (2007?), by Library House

- A Multi-Criteria Decision Support System for R&D Project Selection (1991), written by Theodor Stewart at the University of Cape Town

I also hope to eventually get feedback from someone at a Research Council or university and use it to refine the index based on their needs. Finally, I’m going to try to address some of the points mentioned above to improve the index further.